Prompt engineering is a central part of working with large language models (LLMs) such as GPT-3, GPT-4, and T5, and, in a more limited fill-in-the-blank form, masked language models such as BERT. The wording and structure of a prompt strongly influence the accuracy, relevance, and coherence of the generated response. In this article, we discuss effective prompt techniques for different AI models, then look briefly at creating AI agents and AI applications.

- Detailed Prompts: Models like GPT-3 and GPT-4 can generate long, detailed responses, and a well-structured, detailed prompt helps them understand the context. The prompt should state the topic, the objective, and any constraints or limitations. For example, when generating a summary of an article, the prompt might include the title, author, publication date, and a brief overview of the content.

- Complex Prompts: Models like GPT-3, GPT-4, and T5 can handle complex, multifaceted prompts. Such prompts describe multiple entities, the relationships between them, and the surrounding context, which helps the model generate more relevant and informative responses. For instance, when creating a chatbot for a hotel, the prompt might include information about the location, amenities, room types, and services offered.

- Structured Prompts: Structured prompts follow a consistent format and are especially useful when fine-tuning LLMs for specific tasks or domains. They give the model a clear framework to learn from, so its responses adhere to the intended structure. For example, when training a model for translation, the prompts might consist of sentence pairs: a sentence in one language and its equivalent in another.

- Conditional Prompts: Conditional prompts add conditions or constraints to the main prompt to guide the model’s output. For example, when generating a restaurant recommendation, the prompt might specify the cuisine type, budget, location, and dietary preferences (vegetarian, vegan, gluten-free, etc.).

- Negative Example Prompts: Negative example prompts help steer models away from inappropriate or offensive content. They include examples of what the model should not generate, such as inappropriate language, biased statements, or incorrect information.
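
The techniques in this list can be combined in a single prompt template. The following is a minimal sketch, not a standard API: `PromptSpec`, `build_prompt`, and the section labels (`Task:`, `Context:`, `Constraints:`, `Do not:`) are hypothetical names chosen for illustration, and the restaurant values are made up.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the prompt parts described in the list."""
    task: str                                        # objective (detailed prompt)
    context: dict = field(default_factory=dict)      # entities and facts (complex prompt)
    conditions: dict = field(default_factory=dict)   # constraints (conditional prompt)
    examples: list = field(default_factory=list)     # (input, output) pairs (structured prompt)
    avoid: list = field(default_factory=list)        # things not to generate (negative examples)


def _bullets(items: dict) -> str:
    """Render a dict as '- key: value' lines."""
    return "\n".join(f"- {key}: {value}" for key, value in items.items())


def build_prompt(spec: PromptSpec, user_input: str) -> str:
    """Assemble the sections in a fixed order, skipping any that are empty."""
    parts = [f"Task: {spec.task}"]
    if spec.context:
        parts.append("Context:\n" + _bullets(spec.context))
    if spec.conditions:
        parts.append("Constraints:\n" + _bullets(spec.conditions))
    if spec.avoid:
        parts.append("Do not:\n" + "\n".join(f"- {item}" for item in spec.avoid))
    # Worked examples come last so they sit right before the new input.
    for source, target in spec.examples:
        parts.append(f"Input: {source}\nOutput: {target}")
    parts.append(f"Input: {user_input}\nOutput:")
    return "\n\n".join(parts)


if __name__ == "__main__":
    spec = PromptSpec(
        task="Recommend one restaurant and explain the choice in two sentences.",
        conditions={"cuisine": "Italian", "budget": "under $30 per person", "diet": "vegetarian"},
        avoid=["recommending places outside the stated location", "offensive language"],
    )
    print(build_prompt(spec, "Dinner for two near the city center"))
```

Keeping each technique in its own field makes it easy to test which section actually changes the model's output, since a section can be dropped without touching the rest of the template.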

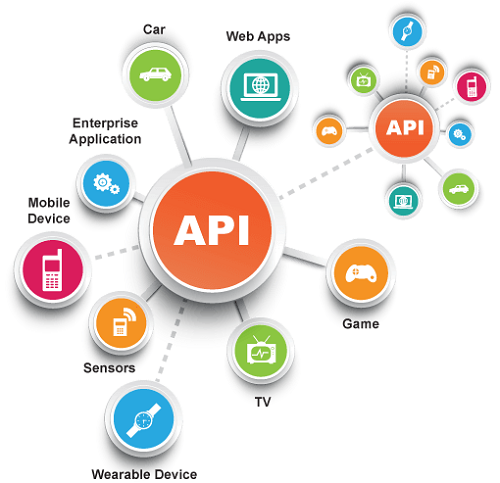

To create AI agents, developers typically combine conventional programming with natural language understanding (NLU) tools. The process involves defining the input and output formats, training the model on relevant data, and integrating it with the target application or platform. Popular tools for creating AI agents include Google Dialogflow and Microsoft Bot Framework.
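
As a rough illustration of the "defining the input and output formats" step, the sketch below wraps a model call in a small agent that asks for JSON and validates the reply. It does not use Dialogflow or Bot Framework. `AgentReply`, `run_agent`, and `fake_model` are hypothetical names, and `fake_model` is a stand-in that returns a canned, made-up answer instead of calling a real LLM.

```python
import json
from dataclasses import dataclass
from typing import Callable


@dataclass
class AgentReply:
    """Output format the agent promises to the surrounding application."""
    intent: str
    answer: str


def parse_reply(raw: str) -> AgentReply:
    """Validate the model's raw text against the expected JSON format."""
    data = json.loads(raw)
    return AgentReply(intent=str(data["intent"]), answer=str(data["answer"]))


def run_agent(message: str, model: Callable[[str], str]) -> AgentReply:
    """Build the prompt, call the model, and fall back if the reply is malformed."""
    prompt = (
        "You are a hotel assistant. Reply with a JSON object containing "
        'the keys "intent" and "answer".\n'
        f"Guest message: {message}"
    )
    try:
        return parse_reply(model(prompt))
    except (json.JSONDecodeError, KeyError, TypeError):
        # Malformed output never reaches the application unvalidated.
        return AgentReply(intent="unknown", answer="Sorry, I could not process that request.")


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call; the reply below is invented for the example."""
    return json.dumps({"intent": "amenities", "answer": "The pool is open from 7am to 10pm."})


if __name__ == "__main__":
    print(run_agent("When is the pool open?", fake_model))
```

Passing the model in as a plain callable keeps the input and output contract separate from any particular platform, so the same validation can be reused when the stand-in is replaced by a real service.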

In conclusion, prompt engineering is a crucial part of working with AI models like GPT-3, GPT-4, BERT, and T5. Detailed, complex, structured, conditional, and negative example prompts can each improve the accuracy, relevance, and coherence of generated responses. By building AI agents on these models and integrating them with their applications, businesses can automate tasks, improve decision-making, and enhance customer experience.