AI Coding Conundrum

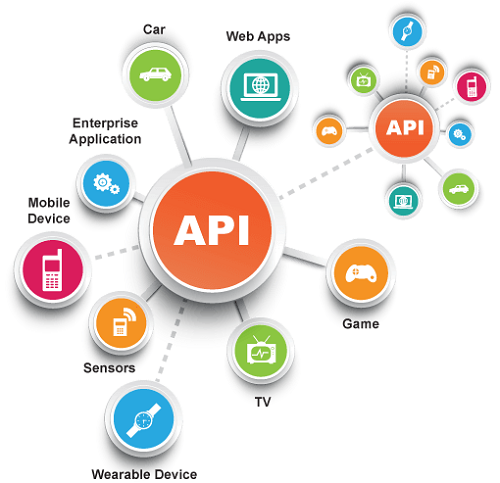

Large Language Models (LLMs) have revolutionized the way we code, allowing developers to generate code more quickly and efficiently. However, the question remains: are they trustworthy assistants? Not entirely. LLMs often produce inconsistent responses, leaving developers to wonder if they can rely on the output. This is because LLMs are trained on vast amounts of data, but they don’t always understand the context or nuances of the code they are generating. As a result, developers may need to spend hours debugging and correcting errors, which can be frustrating and time-consuming.

In addition, the output of LLMs can be difficult to understand, even for experienced developers. The code may be generated quickly, but it may not be optimized or efficient, which can lead to performance issues or errors. This highlights the need for developers to have a deep understanding of coding principles and be able to recognize when AI-generated code is flawed. Without this knowledge, developers may be forced to spend more time fixing errors and re-writing code, which can be costly and time-consuming.
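As a hypothetical illustration of code that works but is not efficient, consider the sketch below. Both functions are invented for this example and are not taken from any particular model's output. The first is the kind of plausible-looking code an assistant might produce; the second returns the same answers with far less work on large inputs.

```python
from typing import Hashable, Iterable


def has_duplicates_slow(items: Iterable[Hashable]) -> bool:
    """Correct, but each `in` check scans a list, so the cost grows quadratically."""
    seen = []
    for item in items:
        if item in seen:  # linear scan of everything seen so far
            return True
        seen.append(item)
    return False


def has_duplicates_fast(items: Iterable[Hashable]) -> bool:
    """Same result, but set membership is a constant-time lookup on average."""
    seen = set()
    for item in items:
        if item in seen:
            return True
        seen.add(item)
    return False
```

Nothing in the slow version looks wrong on a quick read, which is why spotting it depends on knowing how the underlying data structures behave.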

The Gap Between Good and Bad Code

Even skilled developers may struggle with LLM output, and without experience it is harder still to recognize and correct errors, which leads to frustrating debugging sessions. For example, an LLM may generate code that is syntactically correct but semantically incorrect: it parses and runs, yet does something other than what was intended. Experienced developers may be able to recognize these errors and correct them, but less experienced developers may not have the same level of expertise.
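A minimal sketch of what "syntactically correct but semantically incorrect" can look like in practice. The function names are hypothetical and the flawed version is written for illustration, not copied from a real assistant. It runs without complaint and passes a casual spot check, but it ignores the century rule of the Gregorian calendar.

```python
def is_leap_year_flawed(year: int) -> bool:
    """Valid Python, wrong logic: treats every fourth year as a leap year."""
    return year % 4 == 0


def is_leap_year(year: int) -> bool:
    """Century years are leap years only when divisible by 400."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


# Both versions agree on common inputs, which is what makes the bug easy to miss.
print(is_leap_year_flawed(2024), is_leap_year(2024))
# They disagree on 1900, which was not a leap year.
print(is_leap_year_flawed(1900), is_leap_year(1900))
```

A developer who knows the rule will catch this in review; one who does not may only find it when a date calculation fails much later.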

The ‘Rough Edges’ of AI

AI models require developers to know where they can rely on the output and where they need to intervene to avoid errors. This can be a challenge, especially for less experienced developers. The key is to recognize the limitations of AI-generated code and apply it to tasks where it can be most effective. For example, AI-generated code may be suitable for tasks such as data processing or data analysis, but it may not be suitable for tasks that require complex decision-making or problem-solving.

In addition, AI models may not always understand the context or nuances of the code they are generating. Code that parses cleanly can still behave incorrectly, and less experienced developers are less likely to catch it.

The Great Debate: Trusting AI

Some developers argue that LLMs should be used with caution, while others believe they can be helpful even for less experienced developers. The truth lies somewhere in between. While AI can be a powerful tool, it’s essential to understand its limitations and use it in conjunction with human judgment and expertise.

The Sweet Spot: Knowing When to Use AI

Experienced developers can use AI effectively by recognizing its limitations and applying it to tasks they are familiar with, where they can quickly judge whether the output is right. This sweet spot is where AI and human judgment come together, allowing developers to write better code more efficiently.

In addition, AI models may be able to assist developers with tasks such as code refactoring or code optimization, which can be time-consuming and error-prone. By using AI to generate code, developers can free up more time to focus on higher-level tasks, such as designing and testing software.

From Novices to Experts

Less experienced developers can still benefit from AI coding assistants, but may require more guidance and oversight. With the right training and mentorship, they can learn to use AI tools effectively and become proficient coders.

The Future of Coding: Human Judgment

The most effective developers will be those who can combine AI tools with their own judgment and expertise to write better code. In the future, AI will continue to play a role in coding, but human judgment will always be essential. By embracing this hybrid approach, developers can take their coding skills to the next level.
